Audiobook’d version of this post ⇓

Table of Contents

- Mudge. The Twitter whistleblower

- Excerpts from the Twitter whistleblower report

- Twitter gets hacked, bigly

- Twitter had no Privacy or Chief Information Security Officer

- Mudge starts working for Twitter

- Data was insecure

- Spyware

- There was no logging of who went into the environment or what they did

- Deleted accounts are not Deactivated accounts. The word game

- Cascading data center problems: In or around the spring of 2021

- Black Swan existential threat

- Insecure development environment

- Failed Logins

- No employee computer backups

- Log4j, Zero-day vulnerability

- Unlicensed Intellectual Property. Machine learning materials for core algorithms:

- Penetration by Foreign Intelligence

- Why did Twitter hide bad news?

- Volume and frequency of security incidents:

Mudge. The Twitter whistleblower

Introductory statement of Peiter Zatko at the United States Senate Judiciary Committee, September 13, 2022.

Chairman Durbin, Ranking Member Grassley, and Members of the Committee.

At your request, I appear before you today to answer questions about information I submitted in written disclosures about cybersecurity concerns I raised and observed while working at Twitter.

My name is Peiter Zatko, but I am often still called by Mudge, my online handle.

From November 2020 until January 2022, I was Twitter’s Security Lead, a senior executive role in which I was responsible for Information Security, Privacy Engineering, Physical Security, Information Technology, and Twitter Services, the company’s global support and enforcement division.

For 30 years, my mission has been to make the world better by making it more secure. As a cybersecurity expert with over a decade of senior leadership experience, I identify and balance cybersecurity vulnerabilities with business goals.

The cybersecurity vulnerabilities I deal with expose individuals, organizations, and the United States to risk and attacks that cause physical, financial, and emotional harm. I agreed to join Twitter because I believed it was a unique position in which my skills and experience could meaningfully improve the security of users, the United States, and the world.

Twitter was and continues to be one of the world’s most influential communications platforms. What happens on Twitter has an outsized effect on public discourse and our culture. I believed that improving the platform’s security would benefit not only Twitter’s millions of users, but also the people, communities, and institutions affected by the information exchanges and debates taking place on the platform.

To understand how I got here today, however, I think it is important you know about my past. Since the 1990s, I have been a pioneer in the computer and information security field, including helping to found the responsible disclosure movement, which some people refer to as “ethical hacking.”

The responsible reporting of security problems aims to inform people and institutions about cybersecurity vulnerabilities and to show them how to strengthen security.

When a responsible practitioner finds a vulnerability that bad actors can exploit, the person first makes a quiet disclosure directly to the institution, giving the affected company or government the information and the opportunity needed to fix the vulnerability. If the vulnerable institution does not want to hear the truth or fix the problem, the person reporting the problem must determine if public disclosure of the unaddressed security vulnerability is necessary to protect the public.

If the benefit of public disclosure outweighs the risk to the recalcitrant institution, then the responsible practitioner makes the public disclosure necessary to alert the public to the risk and to encourage the institution to address the vulnerability.

I continue to follow this ethical disclosure philosophy and am here today because I believe that Twitter’s unsafe handling of the data of its users and its inability or unwillingness to truthfully represent issues to its board of directors and regulators have created real risk to tens of millions of Americans, the American democratic process, and America’s national security. Further, I believe that Twitter’s willingness to purposely mislead regulatory agencies violates Twitter’s legal obligations and cannot be ethically condoned.

Given the potential harm to the public of Twitter’s unwillingness to address problems I reported and Twitter’s continued efforts to cover up those problems, I determined lawful disclosure was necessary despite the personal and professional risk to me and my family of becoming a whistleblower.

This is not the first time I have had to deal with critical cybersecurity vulnerabilities. I have advised a sitting president, administrations of both parties, Congress, and the intelligence community on these issues. In 2010, I accepted an appointed position in charge of running Cyber Programs for the Department of Defense and Intelligence Communities at DARPA; for my service, I became a decorated civilian after being awarded the medal for exceptional public service, the highest medal able to be bestowed upon a non-career civilian by the Office of the Secretary of Defense.

I then returned to the private sector and worked in senior leadership positions for companies like Motorola, Google, and Stripe, where I continued to help those companies focus on protecting companies and users from security risks.

I joined Twitter after it was infamously hacked by a group of teenagers, who launched what was then the largest hack of a social media platform in history. They took over the accounts of high-profile Twitter users as part of a crypto-currency scam.

Afterward, Twitter’s then-Chief Executive Officer, Jack Dorsey, reached out to me because of my unique breadth of experience in security, asking if I would join the company to assess the state of its security and make fundamental changes. Experience, however, has taught me that making big changes to improve security is hard. And hard changes draw intense opposition from people who profit from the status quo.

It was clear to me, however, that Jack Dorsey was committed to change, so I accepted the challenge. In doing so, I made a personal commitment to Twitter, the greater public, and to myself that I would do my best to drive the changes that Twitter – and its users and our democracy – desperately needed. I have lived by that commitment.

Upon joining Twitter, I discovered that the Company had 10 years of overdue critical security issues, and it was not making meaningful progress on them. This was a ticking bomb of security vulnerabilities. Staying true to my ethical disclosure philosophy, I repeatedly disclosed those security failures to the highest levels of the Company. It was only after my reports went unheeded that I submitted my disclosures to government agencies and regulators.

In those disclosures, I detail how the Company leadership misled its Board of Directors, regulators, and the public. Twitter’s security failures threaten national security, compromise the privacy and security of users, and at times threaten the very continued existence of the Company. I also detail that despite these grave threats, Twitter leadership has refused to make the tough but necessary changes to create a secure platform. Instead, Twitter leadership has repeatedly covered up its security failures by duping regulators and lying to users and investors.

I did not make my whistleblower disclosures out of spite or to harm Twitter. Far from that. I continue to believe in the mission of Twitter and root for its success. But that success can only happen if the privacy and security of Twitter’s users and the public are protected.

Many of the engineers and employees within Twitter have been repeatedly calling for this, but their calls are not being heeded by the executive team. It became clear by Twitter’s actions that the only path to achieve that outcome was through lawful disclosure. My genuine hope is that my disclosures help Twitter finally address its security failures and encourage the Company to listen to its engineers and employees who have long reported the same issues I have disclosed.

I stand by the statements I made in my disclosures and am here to answer any questions you have about them.

Thank you.

End of the opening statement by Peiter Zatko.

Author’s note.

In the following I have made small changes of punctuation and editing to fit this format and the audiobook’d form. Not all the bullets are included here and I have cited the bullet number for reference. In a few instances I have included the footnote in the bullet it cites.

At the bottom of this post I have linked to the source material.

Excerpts from the Twitter whistleblower report

Given to Congress on July 6, 2022

Twitter gets hacked, bigly

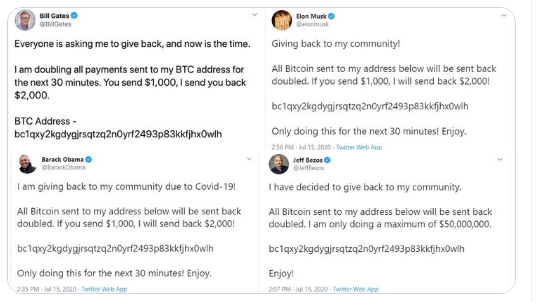

In July 2020, following nine years of supposed fixes, investments, compliance policies, and reports to the Federal Trade Commission, Twitter was hacked by a 17-year-old, then-recent high school graduate from Florida and his friends.

The hackers managed to take over the accounts of former President Barack Obama, then-Presidential candidate Joseph Biden, and high-profile business leaders including, but not limited to, Jeff Bezos, Bill Gates, and Elon Musk. As part of the account takeovers, the hackers urged their tens of millions of followers to send Bitcoin cryptocurrency to an account they created.

37. The 2020 hack was then the largest hack of a social media platform in history, and triggered a global security incident. Moreover, the hack did not involve malware, zero-day exploits, supercomputers brute-forcing their way past encryption, or any other sophisticated approach.

Pretending to be Twitter IT support, the teenage hackers simply called some Twitter employees and asked them for their passwords.

A few employees were duped and complied and, given systemic flaws in Twitter’s access controls, those credentials were enough to achieve “God Mode,” where the teenagers could imposter-tweet from any account they wanted.

Twitter’s solution was to impose a system-wide shutdown of system access to all of its employees, lasting days. For about a month, hiring was paused and the company essentially shut down many basic operations to diagnose the symptoms, not the causes, of the hack.

38. Security experts agreed this extreme response demonstrated that Twitter did not have proper systems in place to understand what had happened, let alone remediate and reconstitute to a safe state. These failures in Twitter’s security raised alarms about more serious breaches that could occur in the future, especially because 2020 was a presidential election year. Bad actors with more sophisticated tools than what was used by a recent high school graduate could easily take advantage of Twitter’s poor security, creating detrimental consequences for the country. As aptly framed in the Wired article about this incident.

On July 28, 2020, the FTC filed a draft complaint alleging Twitter engaged in violations of a 2011 order that the FTC at that time had imposed.

Specifically the draft FTC complaint charged that from 2013 to 2019, Twitter misused users’ phone number and/or email address data for targeted advertising when users had provided this information for safety and security purposes only.

This implied Twitter still lacked basic understandings about how, what, and where its data lived, and how to responsibly protect and handle it. On May 25, 2022, the FTC announced a $150 million fine against Twitter.

Twitter had no Privacy or Chief Information Security Officer

40. At the time of the hack and the new FTC draft complaint, Twitter had neither an executive versed in information security and privacy engineering, nor even a Chief Information Security Officer.

As a result, Parag Agrawal, then Twitter’s Chief Technology Officer, was the ultimate decision-maker for correcting the security vulnerabilities exposed by the hack.

41. Twitter’s CEO at the time, Jack Dorsey, realized the company had serious problems. To demonstrate he was serious about fixing things, Dorsey began recruiting Mudge. At the request of Dorsey, and with the promise of Dorsey’s support, Mudge accepted the offer and expected to spend the rest of his career at Twitter. Mudge never expected or wanted to become a whistleblower. He was convinced that the executives and board were ready to deal with long overdue security and privacy challenges.

Mudge starts working for Twitter

On November 16, 2020, four months after the hack, Mudge began his new job as Security and Integrity Lead with Twitter.

44. After arriving, Mudge spent two months performing an in-depth evaluation to understand how things worked, or didn’t work, at Twitter.

45. Mudge’s findings were dire. Nearly a decade after an earlier FTC Consent Order, with total users growing to almost 400 million and daily users totaling 206 million, Twitter had made little meaningful progress on basic security, integrity, and privacy systems.

Years of regulatory filings in multiple countries were misleading, at best. In many ways, the situation was even worse than the CEO Jack Dorsey feared, as the company haphazardly expanded into contentious international areas without even following existing, albeit deficient, corporate policies.

46. Mudge’s reports, all highly-experienced experts and intimately familiar with Twitter’s problems with the FTC, told Mudge unequivocally that Twitter had never been in compliance with the 2011 FTC Consent Order, and was not on track to ever achieve full compliance. Twitter’s deficiencies are described in greater detail later, and in the exhibits.

Data was insecure

But at a high level, Mudge found serious deficiencies in:

a. Privacy, including

i. Ignorance and misuse of vast internal data sets, with only about 20% of Twitter’s huge data sets registered and managed.

ii. Mishandling Personally Identifiable Information (PII), including repeated marketing campaigns improperly based on user email addresses and phone numbers designated for security purposes only.

iii. Misusing security cookies for functionality and marketing.

iv. Misrepresentations to the FTC on these matters.

b. Information Security (InfoSec), including:

i. Server vulnerabilities, with over 50% of Twitter’s 500,000 data center servers with non-compliant kernels or operating systems, and many unable to support encryption at rest.

ii. Employee computers exposed, with over 30% of devices reporting they had disabled software and security updates.

iii. No Mobile Device Management (MDM) for employee phones, leaving the company with no visibility or control over thousands of devices used to access core company systems.

iv. Insider Threats were virtually unmonitored, and when found the company did not take corrective actions;

c. Fundamental architecture including:

i. Lack of development and testing environments for all software development and testing, highly anomalous for a large tech company, where engineers use live production data and test directly on the commercial service, leading to regular service disruptions.

ii. serious access control problems, with far too many staff (about half of Twitter’s 10,000 employees, and growing) given access to sensitive live production systems and user data in order to do their jobs, the subject of specific misrepresentations in 2020 by then-Chief Technology Officer Parag Agrawal.

iii. Insufficient data center redundancy, without a plan to cold-boot or recover from even minor overlapping data center failure, raising the risk of a brief outage to that of a catastrophic and existential risk for Twitter’s survival.

Spyware

Twitter employees were repeatedly found to be intentionally installing spyware on their work computers at the request of external organizations. Twitter learned of this several times only by accident, or because of employee self-reporting.

In other words, in addition to a large portion of the employee computers having software updates disabled, system firewalls turned off, and remote desktop enabled for non-approved purposes, it was repeatedly demonstrated that until Twitter leadership would stumble across end-point, employee computer, problems, external people or organizations had more awareness of activity on some Twitter employee computers than Twitter itself had.

47. Unsurprisingly, given these and other deficiencies, Twitter suffered from an anomalously high rate of security incidents: approximately one security incident each week serious enough that Twitter was required to report it to government agencies like the FTC and SEC, or foreign agencies like Ireland’s Data Protection Commission.

In 2020 alone, Twitter had more than 40 security incidents, 70% of which were access control-related. These included 20 incidents defined as breaches; all but two of which were access control related. Mudge identified there were several exposures and vulnerabilities at the scale of the 2020 incident waiting to be discovered and reasonably feared Twitter could suffer an Equifax-level hack.

48. Mudge did not want any employees accessing, or potentially damaging the production environment. It was at this point when he learned that it was impossible to protect the production environment. All engineers had access.

There was no logging of who went into the environment or what they did

When Mudge asked what could be done to protect the integrity and stability of the service from a rogue or disgruntled engineer during this heightened period of risk, he learned it was basically nothing. There were no logs, nobody knew where data lived or whether it was critical, and all engineers had some form of critical access to the production environment.

50. Defensiveness and denial from Agrawal: Even at the first executive team meeting where Mudge shared his initial findings, Mudge got stiff pushback. In particular, Twitter’s CTO Parag Agrawal vehemently challenged Mudge’s assessment that Twitter faced a non-negligible existential risk of even brief simultaneous, catastrophic data center failure, and had no workable disaster recovery plan.

Twitter’s most senior engineers had told Mudge they did not know whether, or on what time frame, Twitter could recover from such an outage. Perhaps Agrawal’s defensiveness should not have been surprising: he was a senior engineer later promoted to Chief Technology Officer, and for years Twitter’s problems had developed under his watch.

51. Mudge was shocked to learn that even a temporary but overlapping outage of a small number of datacenters would likely result in the service going offline for weeks, months, or permanently. This was even more disturbing as small outages were not uncommon due to bad software pushes from the engineers.

On top of this, all engineers had some form of access to the data centers, the majority of the systems in the data centers were running out-of-date software no longer supported by vendors, and there was minimal visibility due to extremely poor logging.

This meant that of the four threats Mudge had cited, what would normally be viewed as the least surprising, and was the statistically most likely, issue carried the greatest damage to the company: an existential, company-ending event.

Deleted accounts are not Deactivated accounts. The word game

53. In years past, the FTC had asked Twitter whether the data of users who canceled their accounts was properly “deleted.”

Twitter had determined that not only had the data not been properly deleted, but that data couldn’t even be accounted for. Instead of answering the question that was asked, Twitter assured the FTC that the accounts were “deactivated,” hoping FTC officials wouldn’t notice the difference.

Mudge learned about this historical practice in 2021, and was told that fines could be $3 million each month plus 2% of revenue.

54. Twitter did not actively monitor what employees were doing on their computers. Although against policy, it was commonplace for people to install whatever software they wanted on their work systems.

Cascading data center problems: In or around the spring of 2021

56. Twitter’s primary data center began to experience problems from a runaway engineering process, requiring the company to move operations to other systems outside of this datacenter. But, the other systems could not handle these rapid changes and also began experiencing problems. Engineers flagged the catastrophic danger that all the data centers might go offline simultaneously.

A couple of months earlier, in February, Mudge had flagged this precise risk to the Board because Twitter data centers were fragile, and Twitter lacked plans and processes to cold boot. This meant that if all the centers went offline simultaneously, even briefly, Twitter was unsure if they could bring the service back up. Downtime estimates ranged from weeks of round-the-clock work to permanent, irreparable failure.

Black Swan existential threat

57. In or about Spring of 2021, just such an event was underway, and shutdown looked imminent. Hundreds of engineers nervously watched the data centers struggle to stay running.

The senior executive who supervised the Head of Engineering, aware that the incident was on the verge of taking Twitter offline for weeks, months or permanently, insisted the Board of Directors be informed of an impending catastrophic Black Swan event. Board Member name redacted responded with words to the effect of “Isn’t this exactly what Mudge warned us about?”

Mudge told name redacted that he was correct. In the end, Twitter engineers working around the clock were narrowly able to stabilize the problem before the whole platform shut down.

Insecure development environment

A fundamental engineering and security principle is that access to live production environments should be limited as much as possible. Engineers should mostly work in separate development, test, and/or staging environments using test data and not live customer data.

Over a decade prior, companies like Google moved development to segregated test systems. But at Twitter, engineers built, tested, and developed new software directly in production with access to live customer data and other sensitive information in Twitter’s system.

This ongoing arrangement, almost unheard of at modern tech companies, causes repeated problems for Twitter in bad software deployments and significantly reduces the work an attacker needs to do to acquire credentials with extremely sensitive access.
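As a rough illustration of the principle described above (not a description of any system at Twitter), the sketch below hands out synthetic records in every environment except production. The environment names, the `make_test_users` and `load_users` helpers, and the record fields are all hypothetical.

```python
import random

# Hypothetical environment names; not taken from the disclosure.
ENVIRONMENTS = ("development", "staging", "production")


def make_test_users(count, seed=0):
    """Build synthetic user records so tests never need live customer data."""
    rng = random.Random(seed)
    return [
        {"id": i, "handle": f"test_user_{i}", "followers": rng.randint(0, 1000)}
        for i in range(count)
    ]


def load_users(environment, live_loader=None):
    """Return synthetic data outside production; use live data only in production."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    if environment == "production":
        # Live data is reachable only through an explicitly supplied loader.
        if live_loader is None:
            raise RuntimeError("production requires an explicit live data loader")
        return live_loader()
    return make_test_users(count=3)


users = load_users("staging")
```

The design choice here is that code running in development or staging has no path to customer data at all, so a leaked developer credential exposes only generated records.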

58. Software Development Life Cycle is abbreviated to SDLC. An SDLC is a uniform process to develop and test software, and a basic best practice for engineering development at commercial companies. Twitter’s need to implement an SDLC was more than a best practice, it had been required since the 2011 FTC Consent Order and reported regularly to the Board of Directors.

In or around May 2021, Mudge instructed that the Board Risk Committee receive accurate data showing that the company only had a template for the SDLC, not even a functioning process, and by Q2 2021 that template had only been rolled out for roughly 8 to 12% of projects.

59. Board member name redacted became incensed and noted that for years the board had been hearing “the SDLC effort was getting closer to being complete.” Board member name redacted realized that he and the Board had been misled, and was not happy.

After the meeting, an executive called Mudge to state that he and Agrawal were upset with Mudge for providing accurate information to the Risk Committee, and that he and Agrawal deserved credit for their efforts. The call was a turning point for Mudge. He realized that for years, Agrawal and other executives had been misleading the board by reporting their efforts, not actual results.

Failed Logins

64. In or around August 2021, Mudge notified then-CTO Agrawal and others that the login system for Twitter’s engineers was registering, on average, between 1500 and 3000 failed logins every day, a huge red flag. Agrawal acknowledged that no one knew that, and never assigned anyone to diagnose why this was happening or how to fix it.
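To make the monitoring gap concrete, here is a minimal sketch of the kind of daily tally that would surface such a pattern. The record layout, the sample events, and the threshold are assumptions for illustration; nothing here reflects Twitter’s actual login system.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log records: (ISO timestamp, username, success flag).
SAMPLE_EVENTS = [
    ("2021-08-02T09:15:00", "eng_a", False),
    ("2021-08-02T09:15:05", "eng_a", False),
    ("2021-08-02T09:16:00", "eng_b", True),
    ("2021-08-03T11:00:00", "eng_c", False),
]


def failed_logins_per_day(events):
    """Count failed login attempts per calendar day."""
    counts = Counter()
    for timestamp, _user, success in events:
        if not success:
            day = datetime.fromisoformat(timestamp).date().isoformat()
            counts[day] += 1
    return dict(counts)


def days_over_threshold(daily_counts, threshold):
    """Return the days whose failed-login count exceeds the threshold, sorted."""
    return sorted(day for day, n in daily_counts.items() if n > threshold)


daily = failed_logins_per_day(SAMPLE_EVENTS)
flagged = days_over_threshold(daily, threshold=1)
```

In practice the threshold would be set from a measured baseline, and a flagged day would be assigned to someone to investigate, which is the step the disclosure says never happened.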

No employee computer backups

65. In or around Q3 or Q4 2021, Mudge learned that no Twitter employee computers were being backed up at all. Supposedly, Twitter’s IT department had managed a backup system for years, but it had never been tested and Mudge learned that it was not functioning correctly. Obviously this raised fundamental risks for corporate data integrity, including financial data, for any information needing to be recovered that was located exclusively on employee laptops.

To the extent that financial staff’s data was at risk, it could constitute material weaknesses in internal financial controls required under SEC regulations.

Other Twitter executives—aware that the company was chronically out of compliance with most government requests for information—tried to look on the bright side, noting that going forward Twitter would have a valid excuse for not responding to regulator queries about which data particular employees had access to on which days. They explicitly decided not to replace or fix the employee backup system, but instead discontinued the service entirely.

66. Knowing that employees often had actual data from production systems on their laptops, several executives and leaders commented to the effect of “this is actually a good thing because it means we cannot comply with [legal requests] and have less exposure.”

Log4j, Zero-day vulnerability

69. In December 2021, the world discovered that Log4j, a very common piece of software deployed in hundreds of applications across hundreds of millions, or billions, of computers worldwide, contained a previously-unrecognized zero-day security vulnerability. Overnight, a huge number of computers around the globe needed patching, or else they would be easy for adversaries to exploit. Left unaddressed, Log4j lets hackers break into systems, steal passwords and login information, extract data, and infect networks with malicious software.

Log4j was already actively being exploited to compromise computers worldwide by criminals and governments alike. Because it was the most severe computer vulnerability in years, the FTC instructed companies to pursue remediation and said it could request a detailed explanation and data on a company’s Log4j remediation efforts.

In January 2022, Mudge determined and reported to the executive team that, because of poor engineering architecture decisions that preceded Mudge’s employment, Twitter had over 300 corporate systems and upwards of 10,000 services that might still be affected, but Twitter was unable to thoroughly assess its exposure to Log4j, and did not have capacity, if pressed in a formal investigation, to show to the FTC that the company had properly remediated the problem.

Unlicensed Intellectual Property. Machine learning materials for core algorithms:

70. In January 2022, in the days before he was terminated, Mudge learned that Twitter had never acquired proper legal rights to training material used to build Twitter’s key Machine Learning models. The Machine Learning models at issue were some of the core models running the company’s most basic products, like which Tweets to show each user. Days before Mudge was fired in January 2022, Mudge learned that Twitter executives had been informed of this glaring deficiency several times over the past years, yet they never took remedial action.

71. Misleading regulators in multiple countries: When, years earlier, the FTC had asked questions about the training material used to build Twitter’s machine learning models, Twitter realized that truthful answers would implicate the company in extensive copyright / intellectual property rights violations.

Twitter’s strategy, which executives explicitly acknowledged was deceptive, was to decline to provide the FTC with the requested training material, and instead to point the FTC towards particular models that would not expose Twitter’s failure to acquire appropriate IP rights. In early 2022, the Irish-DPC and French-CNIL were expected to ask similar questions, and a senior privacy employee told Mudge that Twitter was going to attempt the same deception.

Unless circumstances have changed since Mudge was fired in January, Twitter’s continued operation of many of its basic products is most likely unlawful and could be subject to an injunction, which could take down most or all of the Twitter platform. Before Mudge could dig deeper into this issue he was terminated.

Penetration by Foreign Intelligence

72. Over the course of 2021, Mudge became aware of multiple episodes suggesting that Twitter had been penetrated by foreign intelligence agencies and/or was complicit in threats to democratic governance, including:

Twitter executives opted to allow Twitter to become more dependent upon revenue coming from Chinese entities even though the Twitter service is blocked in China.

After Chinese entities paid money to Twitter, there were concerns within Twitter that the information the Chinese entities could receive would allow them to identify and learn sensitive information about Chinese users who successfully circumvented the block, and other users around the world.

Twitter executives knew that accepting Chinese money risked endangering users in China (where employing VPNs or other circumvention technologies to access the platform is prohibited) and elsewhere. Twitter executives understood this constituted a major ethical compromise. Mr. Zatko was told that Twitter was too dependent upon the revenue stream at this point to do anything other than attempt to increase it.

Why did Twitter hide bad news?

73. In none of these cases did Twitter choose to focus on the long term health of the platform and company. Mudge’s inference from these and other episodes was that some senior executives were intent on hiding bad news, or even misrepresenting it, instead of trying to fix it.

This was possibly because:

(a) executives had personal financial incentives to grow mDAU / active users;

or (b) they didn’t know any better;

or (c) some of them had built the broken system in the first place.

75. As of the date of Mudge’s termination, January 19, 2022, Twitter remained out of compliance in multiple respects with the 2011 FTC Consent Order, which has the force of law following Twitter’s consent to its terms. While the company had made progress on privacy because of Mudge’s leadership, it was not on track to ever achieve compliance for other important items, especially in the area of information security in which Mudge’s reform efforts had been repeatedly and unreasonably blocked.

76. Dorsey Out, Agrawal In: In November 2021, Twitter announced that Dorsey was stepping down, and would be replaced by Agrawal, effective November 29.

80. At a high level, Mudge flagged four issues which were improperly omitted or presented in a misleading way:

Basic security protections on software and system patching. The materials reported a misleading statistic that 92% of employee computers had security software installed, implying those computers were secure. In fact, that software’s most basic function was to determine whether that particular computer’s software and settings met basic security standards, and the software was reporting that one-third of the systems were critically insecure.

A full 30% of employee systems were reporting that they had disabled critical safety settings such as software updates. Other critical flaws reported by the software brought this number closer to 50%. This crucial context was not included for the Board.

Access control to systems and data:

On the issue that led to the FTC complaint in 2011 and the July 2020 hack, the materials did not contain the overall numbers, which were getting worse. Instead, the materials took a small, cherry-picked subset of data that could be made to look like a positive trend, and turned that into a graph with a downward trajectory.

The graph misleadingly suggested that Twitter was making significant progress in reducing access to production systems. Mudge knew that the actual underlying data showed that at the end of 2021, 51% of the 11 thousand full-time employees, about 5,000 employees, had privileged access to Twitter’s production systems, a 5% increase from the 46% of total employees in February of 2021.
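As a quick check on the figures quoted above, the arithmetic can be worked through directly. This sketch takes the 11,000 headcount at face value; the source’s “about 5,000” is presumably rounded, and the “5% increase” reads most naturally as five percentage points.

```python
# Figures quoted in the disclosure (headcount taken as exactly 11,000).
total_employees = 11_000
percent_end_2021 = 51
percent_feb_2021 = 46

# Employees with privileged production access at the end of 2021.
with_access = total_employees * percent_end_2021 // 100

# Change in the share, in percentage points.
point_change = percent_end_2021 - percent_feb_2021

# Relative growth of the share itself, in percent.
relative_change = round((percent_end_2021 / percent_feb_2021 - 1) * 100, 1)
```

Under these assumptions, 51% of 11,000 is 5,610 people, and a move from 46% to 51% is a rise of five percentage points, or roughly 10.9% relative growth in the share of staff with access.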

Volume and frequency of security incidents:

A graphic in the document showed only a subset of security incidents, presented as if to encompass all security incidents. Twitter’s actual total number of security incidents in 2021 was closer to 60, a marked difference from what was being implied. The misleading graphic also attributed only 7% of incidents to access control, when in reality access control was the root cause of 60% of security incidents.

Lack of Software Development Life Cycle (or related processes and compliance), was presented as largely completed, instead of still in the initial phases of planning.

111. For years, across many public statements and SEC filings, Twitter has made material misrepresentations and omissions, and engaged in acts and practices operating as deceit upon its users and shareholders, regarding security, privacy and integrity.

114. Twitter senior leadership have known for years that the company has never held proper licenses to the data sets and/or software used to build some of the key Machine Learning models used to run the service. Litigation by the true owners of the relevant IP could force Twitter to pay massive monetary damages, and/or obtain an injunction putting an end to Twitter’s entire Responsible Machine Learning program and all products derived from it. Either of these scenarios would constitute a Material Adverse Effect on the company.

Author’s addendum:

- Twitter has said Zatko’s complaint is riddled with inaccuracies.

- On August 9th, a former Twitter employee was found guilty of spying on Saudi dissidents using the social media platform, identifying critics of the Saudi monarchy who had been posting under anonymous Twitter handles, and supplying the information to Prince Mohammed’s aide Bader al-Asaker.

- On November 2nd, 2017, President Donald Trump’s Twitter account disappeared from the site for around 11 minutes. In a series of tweets issued by Twitter’s Government and Elections team, the company first blamed “human error”, then attributed the move to a rogue employee who used their last day on the job to boot the president off the service. The ex-Twitter employee responsible was working for Twitter as a contractor with a firm named Pro Unlimited. Twitter said “We have implemented safeguards to prevent this from happening again. We won’t be able to share all details about our internal investigation or updates to our security measures, but we take this seriously and our teams are on it.”

The end?